EfficientZero: Mastering Atari Games with Limited Data (Machine Learning Research Paper Explained)

#efficientzero #muzero #atari

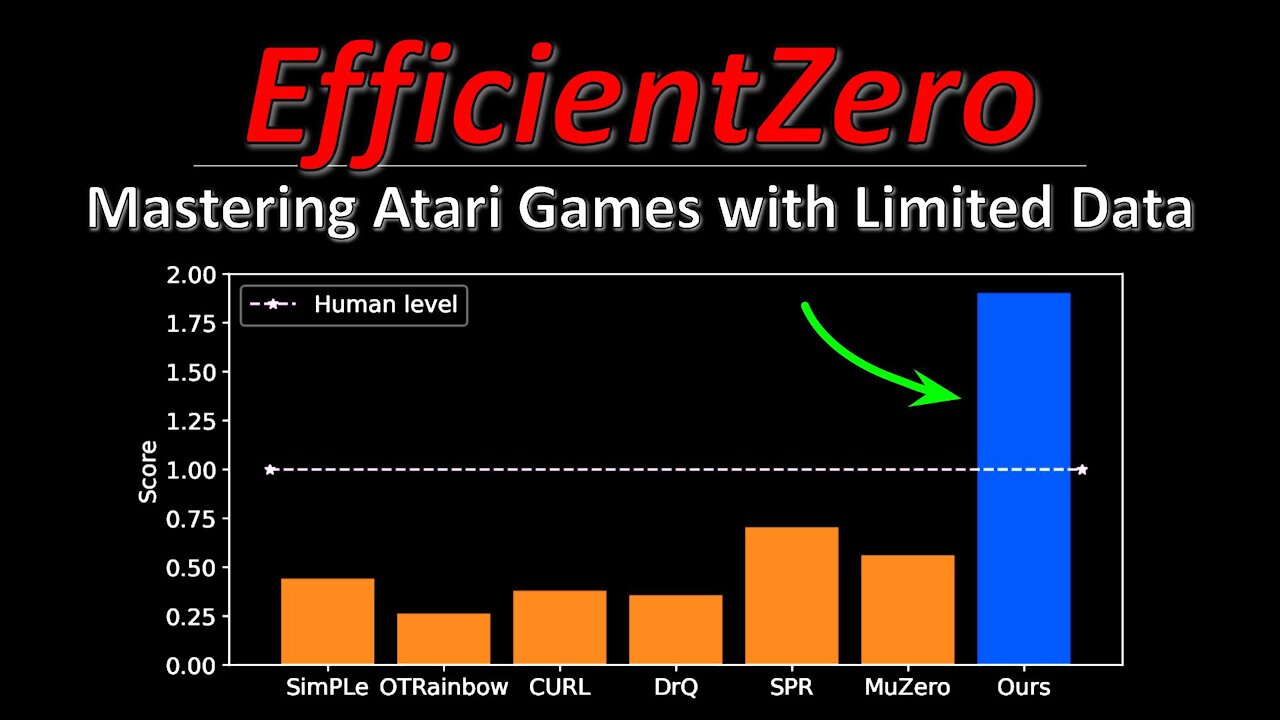

Reinforcement learning methods are notoriously data-hungry. Notably, MuZero learns a latent world model just from the scalar feedback of reward and policy predictions, and therefore relies on scale to perform well. Most RL algorithms, however, fail when presented with very little data. EfficientZero makes several improvements over MuZero that allow it to learn from astonishingly small amounts of data and to outperform other methods by a large margin in the low-sample setting. This could become a staple algorithm for future RL research.

OUTLINE:

0:00 - Intro & Outline

2:30 - MuZero Recap

10:50 - EfficientZero improvements

14:15 - Self-Supervised consistency loss

17:50 - End-to-end prediction of the value prefix

20:40 - Model-based off-policy correction

25:45 - Experimental Results & Conclusion

Paper: https://arxiv.org/abs/2111.00210

Code: https://github.com/YeWR/EfficientZero

Note: the code had not yet been published when this video was released.

Abstract:

Reinforcement learning has achieved great success in many applications. However, sample efficiency remains a key challenge, with prominent methods requiring millions (or even billions) of environment steps to train. Recently, there has been significant progress in sample efficient image-based RL algorithms; however, consistent human-level performance on the Atari game benchmark remains an elusive goal. We propose a sample efficient model-based visual RL algorithm built on MuZero, which we name EfficientZero. Our method achieves 190.4% mean human performance and 116.0% median performance on the Atari 100k benchmark with only two hours of real-time game experience and outperforms the state SAC in some tasks on the DMControl 100k benchmark. This is the first time an algorithm achieves super-human performance on Atari games with such little data. EfficientZero's performance is also close to DQN's performance at 200 million frames while we consume 500 times less data. EfficientZero's low sample complexity and high performance can bring RL closer to real-world applicability. We implement our algorithm in an easy-to-understand manner and it is available at https://github.com/YeWR/EfficientZero. We hope it will accelerate the research of MCTS-based RL algorithms in the wider community.
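
The "two hours" and "500 times less data" figures in the abstract can be sanity-checked with a little arithmetic. The sketch below is not from the paper's code. It assumes the usual Atari 100k conventions, which the abstract does not spell out: 100,000 agent steps, a frame skip of 4, and 60 frames per second of real-time play. The function and constant names are made up for illustration.

```python
# Rough sanity check of the data-budget claims in the abstract.
# Assumptions (not stated in the abstract): frame skip of 4 and 60 FPS,
# the common conventions for the Atari 100k benchmark.
AGENT_STEPS = 100_000        # "100k" in Atari 100k
FRAME_SKIP = 4               # assumed: each agent step repeats the action for 4 frames
FPS = 60                     # assumed: real-time Atari frame rate
DQN_FRAMES = 200_000_000     # "DQN's performance at 200 million frames"


def atari_100k_budget(agent_steps=AGENT_STEPS, frame_skip=FRAME_SKIP, fps=FPS):
    """Return (total game frames, hours of real-time play) for a step budget."""
    frames = agent_steps * frame_skip
    hours = frames / fps / 3600
    return frames, hours


frames, hours = atari_100k_budget()
data_ratio = DQN_FRAMES / frames

print(f"frames seen: {frames}")
print(f"real-time experience: {hours:.2f} hours")
print(f"DQN frames / EfficientZero frames: {data_ratio:.0f}x")
```

Under these assumptions the budget comes to 400,000 frames, a bit under two hours of play, and the ratio to 200 million frames is 500, which matches the numbers quoted above.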

Authors: Weirui Ye, Shaohuai Liu, Thanard Kurutach, Pieter Abbeel, Yang Gao

Links:

TabNine Code Completion (Referral): http://bit.ly/tabnine-yannick

YouTube: https://www.youtube.com/c/yannickilcher

Twitter: https://twitter.com/ykilcher

Discord: https://discord.gg/4H8xxDF

BitChute: https://www.bitchute.com/channel/yann...

Minds: https://www.minds.com/ykilcher

Parler: https://parler.com/profile/YannicKilcher

LinkedIn: https://www.linkedin.com/in/ykilcher

BiliBili: https://space.bilibili.com/1824646584

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: https://www.subscribestar.com/yannick...

Patreon: https://www.patreon.com/yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n
