
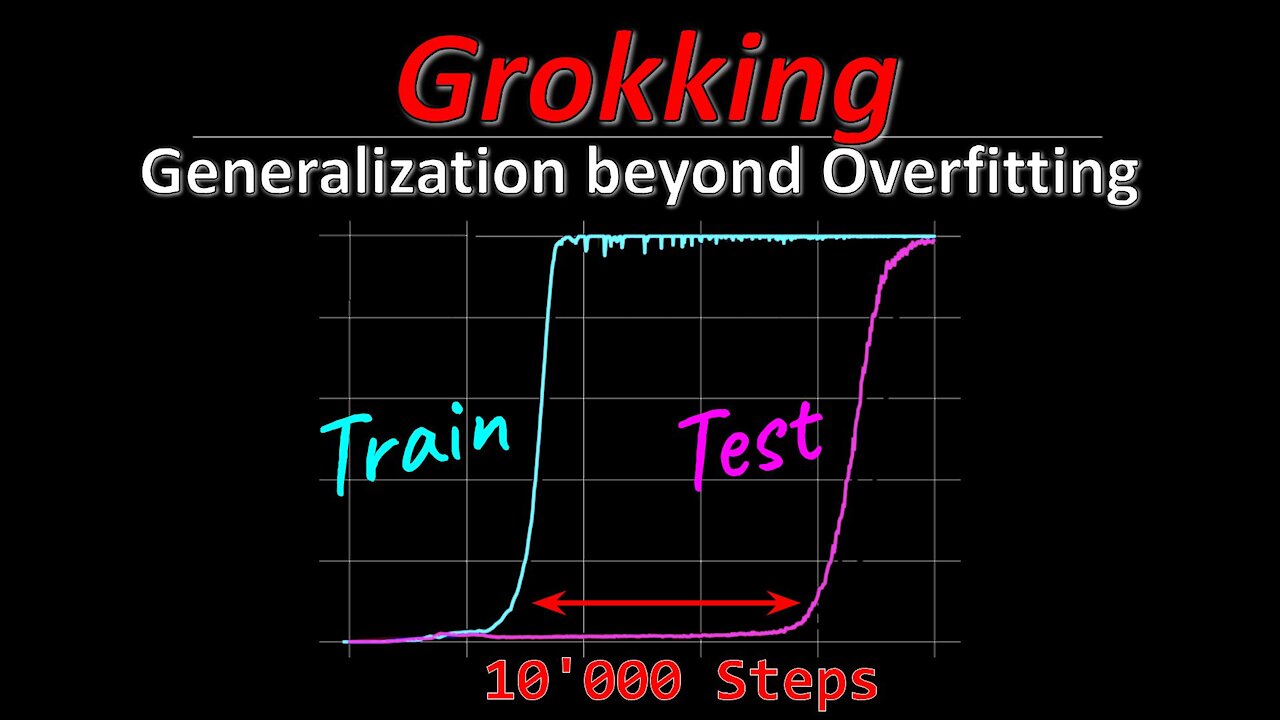

Grokking: Generalization beyond Overfitting on small algorithmic datasets (Paper Explained)

#grokking #openai #deeplearning

Grokking is a phenomenon in which a neural network suddenly learns a pattern in the dataset and jumps from chance-level generalization to perfect generalization. This paper demonstrates grokking on small algorithmic datasets where a network has to fill in the missing entries of binary operation tables. Interestingly, the learned latent spaces show an emergence of the structure of the underlying binary operations that the data were created with.
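To make the "fill in the table" setup concrete, here is a minimal sketch of how such a dataset could be built. The operation (addition modulo `P`), the modulus `P = 7`, the 50% split, and the helper names `make_binary_table` and `split_table` are illustrative assumptions, not the paper's exact configuration.

```python
import random


def make_binary_table(p, op):
    """Return every cell of the p x p table as a triple (a, b, op(a, b))."""
    return [(a, b, op(a, b)) for a in range(p) for b in range(p)]


def split_table(cells, train_fraction, seed=0):
    """Shuffle the cells and split them into shown (train) and hidden (held-out) parts."""
    rng = random.Random(seed)
    shuffled = list(cells)
    rng.shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


P = 7  # hypothetical small modulus, chosen only to keep the table readable
table = make_binary_table(P, lambda a, b: (a + b) % P)
train, held_out = split_table(table, train_fraction=0.5)
```

The network would see only the `train` cells and be evaluated on `held_out`, so generalizing means inferring the rule behind the table rather than memorizing the entries it was shown.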

OUTLINE:

0:00 - Intro & Overview

1:40 - The Grokking Phenomenon

3:50 - Related: Double Descent

7:50 - Binary Operations Datasets

11:45 - What quantities influence grokking?

15:40 - Learned Emerging Structure

17:35 - The role of smoothness

21:30 - Simple explanations win

24:30 - Why does weight decay encourage simplicity?

26:40 - Appendix

28:55 - Conclusion & Comments

Paper: https://mathai-iclr.github.io/papers/...

Abstract:

In this paper we propose to study generalization of neural networks on small algorithmically generated datasets. In this setting, questions about data efficiency, memorization, generalization, and speed of learning can be studied in great detail. In some situations we show that neural networks learn through a process of “grokking” a pattern in the data, improving generalization performance from random chance level to perfect generalization, and that this improvement in generalization can happen well past the point of overfitting. We also study generalization as a function of dataset size and find that smaller datasets require increasing amounts of optimization for generalization. We argue that these datasets provide a fertile ground for studying a poorly understood aspect of deep learning: generalization of overparametrized neural networks beyond memorization of the finite training dataset.

Authors: Alethea Power, Yuri Burda, Harri Edwards, Igor Babuschkin & Vedant Misra

Links:

TabNine Code Completion (Referral): http://bit.ly/tabnine-yannick

YouTube: https://www.youtube.com/c/yannickilcher

Twitter: https://twitter.com/ykilcher

Discord: https://discord.gg/4H8xxDF

BitChute: https://www.bitchute.com/channel/yann...

Minds: https://www.minds.com/ykilcher

Parler: https://parler.com/profile/YannicKilcher

LinkedIn: https://www.linkedin.com/in/ykilcher

BiliBili: https://space.bilibili.com/1824646584

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: https://www.subscribestar.com/yannick...

Patreon: https://www.patreon.com/yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n
