Premium Only Content

This video is only available to Rumble Premium subscribers. Subscribe to

enjoy exclusive content and ad-free viewing.

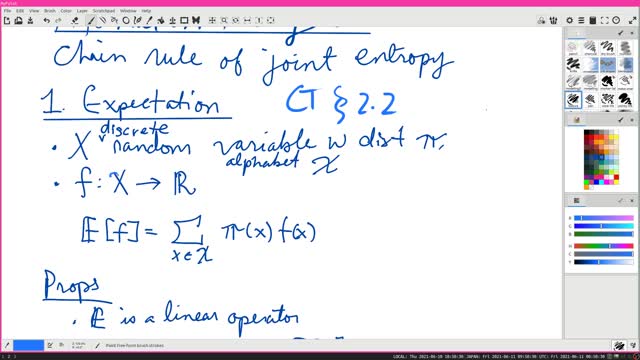

Chain Rule of Joint Entropy | Information Theory 5 | Cover-Thomas Section 2.2

3 years ago


H(X, Y) = H(X) + H(Y | X). In other words, the joint entropy (= uncertainty) of two random variables is the entropy of one plus the conditional entropy of the other given the first. In particular, if X and Y are independent, then H(Y | X) = H(Y), so the joint uncertainty is the sum of the two individual uncertainties: H(X, Y) = H(X) + H(Y).
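
The chain rule can be checked numerically on a small example. The sketch below is not taken from the video; the joint distribution `joint` and the helper names (`entropy`, `marginal_x`, `conditional_entropy_y_given_x`) are made up for illustration, and entropies are measured in bits.

```python
import math

def entropy(probs):
    """Shannon entropy in bits of an iterable of probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def marginal_x(joint):
    """Marginal p(x) obtained by summing the joint p(x, y) over y."""
    px = {}
    for (x, _), p in joint.items():
        px[x] = px.get(x, 0.0) + p
    return px

def conditional_entropy_y_given_x(joint):
    """H(Y | X) = sum over x of p(x) * H(Y | X = x)."""
    total = 0.0
    for x, p_x in marginal_x(joint).items():
        # Conditional distribution p(y | x) for this fixed x.
        cond = [p / p_x for (xi, _), p in joint.items() if xi == x]
        total += p_x * entropy(cond)
    return total

# Hypothetical joint distribution p(x, y) with X, Y in {0, 1} (dependent).
joint = {(0, 0): 0.5, (0, 1): 0.25, (1, 0): 0.125, (1, 1): 0.125}

h_xy = entropy(joint.values())
h_x = entropy(marginal_x(joint).values())
h_y_given_x = conditional_entropy_y_given_x(joint)

# Chain rule: H(X, Y) = H(X) + H(Y | X).
print(h_xy, h_x + h_y_given_x)

# Independent case: p(x, y) = p(x) * p(y), so H(Y | X) = H(Y).
p_x = {0: 0.75, 1: 0.25}
p_y = {0: 0.5, 1: 0.5}
indep = {(x, y): p_x[x] * p_y[y] for x in p_x for y in p_y}
h_indep = entropy(indep.values())
h_sum = entropy(p_x.values()) + entropy(p_y.values())
print(h_indep, h_sum)
```

In both cases the two printed numbers should agree up to floating-point rounding: the first pair illustrates the general chain rule, the second the additive special case for independent variables.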

#InformationTheory #CoverThomas
