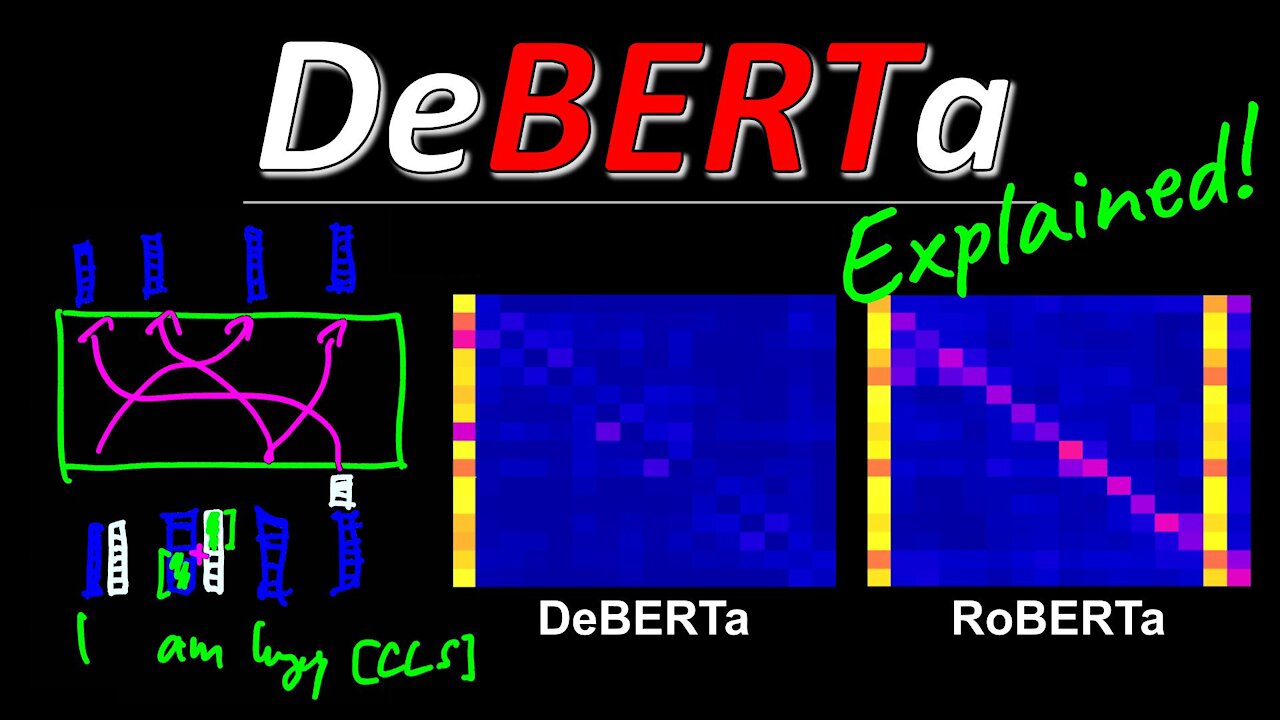

DeBERTa: Decoding-enhanced BERT with Disentangled Attention (Machine Learning Paper Explained)

#deberta #bert #huggingface

DeBERTa by Microsoft is the next iteration of BERT-style self-attention Transformer models, surpassing RoBERTa and setting a new state of the art on multiple NLP tasks. DeBERTa brings two key improvements: First, they treat content and position information separately in a new form of disentangled attention mechanism. Second, they use relative positional encodings throughout the body of the transformer, and provide absolute positional encodings only at the very end. The resulting model is both more accurate on downstream tasks and needs fewer pretraining steps to reach good accuracy. Models are also available on Huggingface and on GitHub.
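To make the disentangled attention idea concrete, here is a minimal NumPy sketch of the attention scores for a single head. It is not the official implementation: the function names (`relative_bucket`, `disentangled_scores`, `softmax_rows`), the random weights, and the tiny sizes are all assumptions chosen for illustration. It only shows how a content-to-content term, a content-to-position term, and a position-to-content term can be summed, with relative distances clipped to a window of size `k`.

```python
import numpy as np

def relative_bucket(n, k):
    """Clipped relative distance delta(i, j) in [0, 2k - 1] for a sequence of length n."""
    i = np.arange(n)[:, None]
    j = np.arange(n)[None, :]
    return np.clip(i - j + k, 0, 2 * k - 1)

def softmax_rows(x):
    """Numerically stable softmax over the last axis."""
    x = x - x.max(axis=-1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)

def disentangled_scores(H, P, Wq_c, Wk_c, Wq_r, Wk_r, k):
    """Scaled sum of content-to-content, content-to-position and
    position-to-content scores (single head, illustrative only).

    H: (n, h) content vectors; P: (2k, h) relative position embeddings.
    """
    n, d = H.shape[0], Wq_c.shape[1]
    Qc, Kc = H @ Wq_c, H @ Wk_c        # content projections, shape (n, d)
    Qr, Kr = P @ Wq_r, P @ Wk_r        # position projections, shape (2k, d)
    delta = relative_bucket(n, k)      # delta[i, j] = bucket of (i - j)

    c2c = Qc @ Kc.T                                         # Qc_i . Kc_j
    c2p = np.take_along_axis(Qc @ Kr.T, delta, axis=1)      # Qc_i . Kr_{delta(i, j)}
    p2c = np.take_along_axis(Kc @ Qr.T, delta, axis=1).T    # Kc_j . Qr_{delta(j, i)}
    return (c2c + c2p + p2c) / np.sqrt(3 * d)

# Toy example with made-up sizes and random weights.
rng = np.random.default_rng(0)
n, h, d, k = 5, 8, 4, 2
H = rng.normal(size=(n, h))
P = rng.normal(size=(2 * k, h))
Wq_c, Wk_c, Wq_r, Wk_r = (rng.normal(size=(h, d)) for _ in range(4))

scores = disentangled_scores(H, P, Wq_c, Wk_c, Wq_r, Wk_r, k)
weights = softmax_rows(scores)         # each row is a distribution over keys
```

Note that no absolute position enters these scores; only the clipped offset between query and key positions does, which is the property the video discusses before turning to the enhanced mask decoder.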

OUTLINE:

0:00 - Intro & Overview

2:15 - Position Encodings in Transformer's Attention Mechanism

9:55 - Disentangling Content & Position Information in Attention

21:35 - Disentangled Query & Key construction in the Attention Formula

25:50 - Efficient Relative Position Encodings

28:40 - Enhanced Mask Decoder using Absolute Position Encodings

35:30 - My Criticism of EMD

38:05 - Experimental Results

40:30 - Scaling up to 1.5 Billion Parameters

44:20 - Conclusion & Comments

Paper: https://arxiv.org/abs/2006.03654

Code: https://github.com/microsoft/DeBERTa

Huggingface models: https://huggingface.co/models?search=deberta

Abstract:

Recent progress in pre-trained neural language models has significantly improved the performance of many natural language processing (NLP) tasks. In this paper we propose a new model architecture DeBERTa (Decoding-enhanced BERT with disentangled attention) that improves the BERT and RoBERTa models using two novel techniques. The first is the disentangled attention mechanism, where each word is represented using two vectors that encode its content and position, respectively, and the attention weights among words are computed using disentangled matrices on their contents and relative positions, respectively. Second, an enhanced mask decoder is used to incorporate absolute positions in the decoding layer to predict the masked tokens in model pre-training. In addition, a new virtual adversarial training method is used for fine-tuning to improve models' generalization. We show that these techniques significantly improve the efficiency of model pre-training and the performance of both natural language understanding (NLU) and natural language generation (NLG) downstream tasks. Compared to RoBERTa-Large, a DeBERTa model trained on half of the training data performs consistently better on a wide range of NLP tasks, achieving improvements on MNLI by +0.9% (90.2% vs. 91.1%), on SQuAD v2.0 by +2.3% (88.4% vs. 90.7%) and RACE by +3.6% (83.2% vs. 86.8%). Notably, we scale up DeBERTa by training a larger version that consists of 48 Transformer layers with 1.5 billion parameters. The significant performance boost makes the single DeBERTa model surpass the human performance on the SuperGLUE benchmark (Wang et al., 2019a) for the first time in terms of macro-average score (89.9 versus 89.8), and the ensemble DeBERTa model sits atop the SuperGLUE leaderboard as of January 6, 2021, outperforming the human baseline by a decent margin (90.3 versus 89.8).
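For reference, the disentangled attention described in the abstract can be written out as follows. This is a restatement from memory of the formulation in the paper, so check the notation against the paper itself: $H$ denotes the content vectors, $P$ the shared relative position embeddings, $d$ the per-head dimension, and $k$ the maximum relative distance.

```latex
\begin{aligned}
Q_c &= H W_{q,c}, \quad K_c = H W_{k,c}, \quad V_c = H W_{v,c}, \quad
Q_r = P W_{q,r}, \quad K_r = P W_{k,r} \\[4pt]
\delta(i,j) &=
\begin{cases}
0 & \text{if } i - j \le -k \\
2k - 1 & \text{if } i - j \ge k \\
i - j + k & \text{otherwise}
\end{cases} \\[4pt]
\tilde{A}_{i,j} &=
\underbrace{Q^c_i {K^c_j}^{\top}}_{\text{content-to-content}}
+ \underbrace{Q^c_i {K^r_{\delta(i,j)}}^{\top}}_{\text{content-to-position}}
+ \underbrace{K^c_j {Q^r_{\delta(j,i)}}^{\top}}_{\text{position-to-content}} \\[4pt]
H_o &= \operatorname{softmax}\!\left(\frac{\tilde{A}}{\sqrt{3d}}\right) V_c
\end{aligned}
```

The scaling by $\sqrt{3d}$ rather than $\sqrt{d}$ reflects that three score terms are summed instead of one.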

Authors: Pengcheng He, Xiaodong Liu, Jianfeng Gao, Weizhu Chen

Links:

TabNine Code Completion (Referral): http://bit.ly/tabnine-yannick

YouTube: https://www.youtube.com/c/yannickilcher

Twitter: https://twitter.com/ykilcher

Discord: https://discord.gg/4H8xxDF

BitChute: https://www.bitchute.com/channel/yannic-kilcher

Minds: https://www.minds.com/ykilcher

Parler: https://parler.com/profile/YannicKilcher

LinkedIn: https://www.linkedin.com/in/yannic-kilcher-488534136/

BiliBili: https://space.bilibili.com/1824646584

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: https://www.subscribestar.com/yannickilcher

Patreon: https://www.patreon.com/yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n
