Premium Only Content

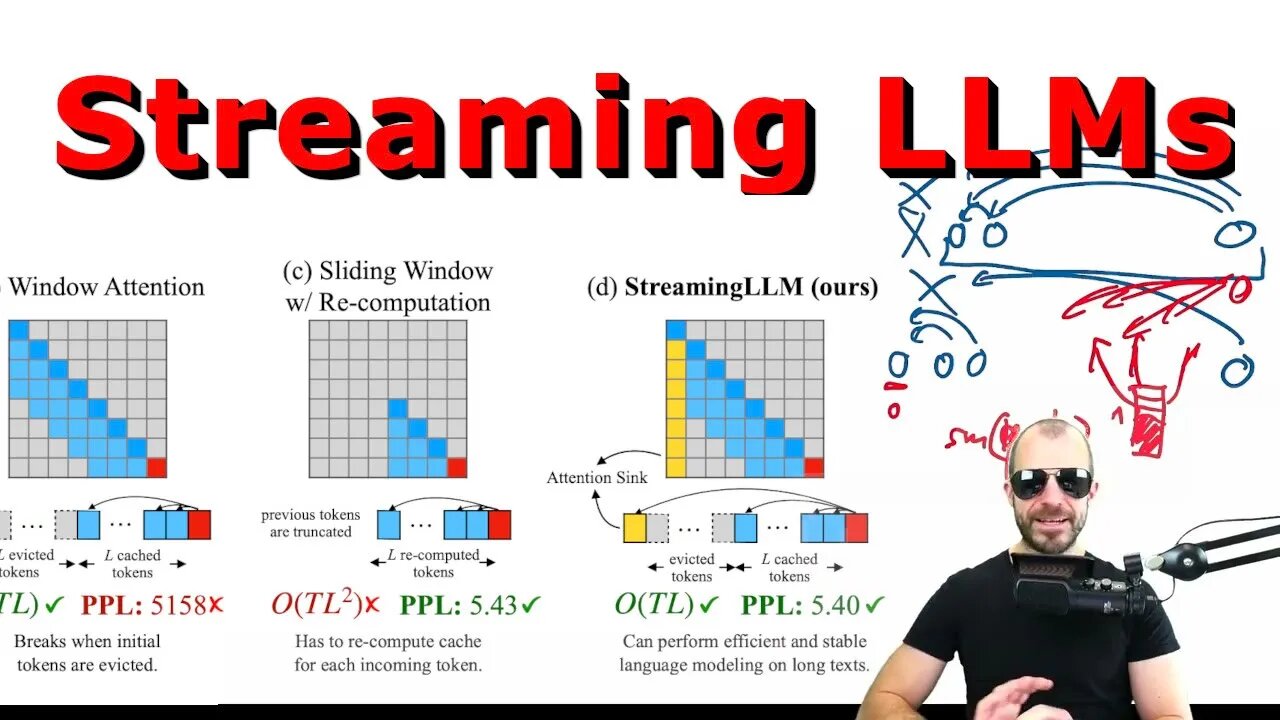

Efficient Streaming Language Models with Attention Sinks (Paper Explained)

#llm #ai #chatgpt

How does one run inference for a generative autoregressive language model that has been trained with a fixed context size? Streaming LLMs combine the performance of windowed attention, but avoid the drop in performance by using attention sinks - an interesting phenomenon where the token at position 0 acts as an absorber of "extra" attention.

OUTLINE:

0:00 - Introduction

1:20 - What is the problem?

10:30 - The hypothesis: Attention Sinks

15:10 - Experimental evidence

18:45 - Streaming LLMs

20:45 - Semantics or position?

22:30 - Can attention sinks be learned?

27:45 - More experiments

30:10 - Comparison to Big Bird

Paper: https://arxiv.org/abs/2309.17453

Abstract:

Deploying Large Language Models (LLMs) in streaming applications such as multi-round dialogue, where long interactions are expected, is urgently needed but poses two major challenges. Firstly, during the decoding stage, caching previous tokens' Key and Value states (KV) consumes extensive memory. Secondly, popular LLMs cannot generalize to longer texts than the training sequence length. Window attention, where only the most recent KVs are cached, is a natural approach -- but we show that it fails when the text length surpasses the cache size. We observe an interesting phenomenon, namely attention sink, that keeping the KV of initial tokens will largely recover the performance of window attention. In this paper, we first demonstrate that the emergence of attention sink is due to the strong attention scores towards initial tokens as a ``sink'' even if they are not semantically important. Based on the above analysis, we introduce StreamingLLM, an efficient framework that enables LLMs trained with a finite length attention window to generalize to infinite sequence lengths without any fine-tuning. We show that StreamingLLM can enable Llama-2, MPT, Falcon, and Pythia to perform stable and efficient language modeling with up to 4 million tokens and more. In addition, we discover that adding a placeholder token as a dedicated attention sink during pre-training can further improve streaming deployment. In streaming settings, StreamingLLM outperforms the sliding window recomputation baseline by up to 22.2x speedup. Code and datasets are provided at this https URL.

Authors: Guangxuan Xiao, Yuandong Tian, Beidi Chen, Song Han, Mike Lewis

Links:

Homepage: https://ykilcher.com

Merch: https://ykilcher.com/merch

YouTube: https://www.youtube.com/c/yannickilcher

Twitter: https://twitter.com/ykilcher

Discord: https://ykilcher.com/discord

LinkedIn: https://www.linkedin.com/in/ykilcher

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: https://www.subscribestar.com/yannickilcher

Patreon: https://www.patreon.com/yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n

-

1:57:48

1:57:48

The Charlie Kirk Show

2 hours agoTrump Gets His Cabinet + Killing the USAID Grift + Why Bud Light Collapsed | Frericks | 2.4.2025

91.2K23 -

1:21:47

1:21:47

Simply Bitcoin

2 hours ago $1.05 earnedDid America JUST Change The Bitcoin Nation State Race Forever?! | EP 1175

12.5K -

37:13

37:13

Grant Stinchfield

2 hours ago $1.21 earnedBill Gates is Making the Media Rounds Today to Push the Vax and Stop RFK Jr.

8.02K12 -

DVR

DVR

TheAlecLaceShow

3 hours agoGuest: Rep. Burgess Owens | Trump Wins with Mexico & Canada | DNC Diversity | The Alec Lace Show

6.17K4 -

1:00:28

1:00:28

The Dan Bongino Show

4 hours agoTrump’s Most Important Fight To Date (Ep. 2415) - 02/04/2025

603K1K -

59:44

59:44

The Rubin Report

3 hours agoPress Gasps When Shown What USAID Spent Money On

77.3K112 -

1:15:21

1:15:21

Bare Knuckle Fighting Championship

4 hours agoThe Bare Knuckle Show with Brian Soscia

13.7K2 -

1:28:32

1:28:32

The Shannon Joy Show

4 hours ago🔥SHOCK Report - The COVID Dossier! A Coordinated Global Military Operation: Live EXCLUSIVE W/ Sasha Latypova & Debbie Lerman.🔥

12.8K2 -

1:57:04

1:57:04

Steven Crowder

5 hours agoUSAID Exposed: Everything You Need to Know Featuring Mike Benz

363K208 -

2:01:57

2:01:57

LFA TV

18 hours agoCLEANING HOUSE!! | LIVE FROM AMERICA 2.4.25 11am

66.1K19