
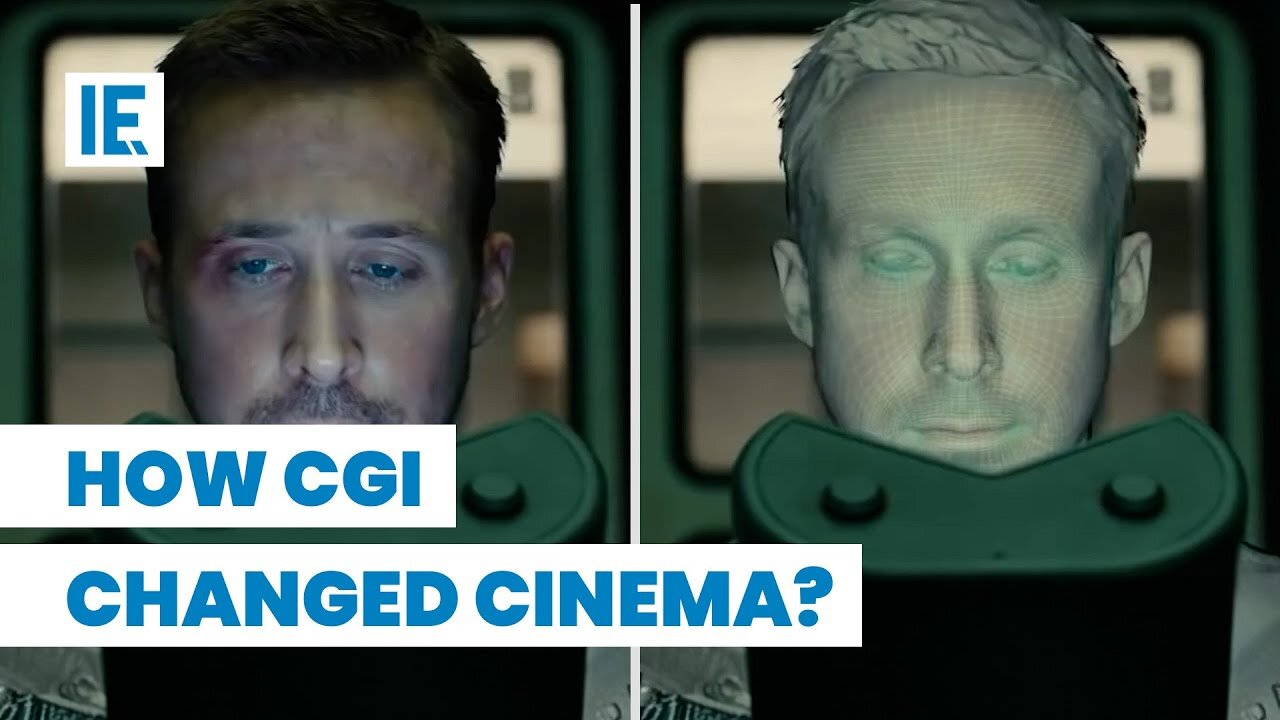

Is CGI Getting Worse?

The evolution of computer-generated imagery (CGI) has revolutionized the film industry and greatly expanded what visual storytelling can do. Contrary to a common misconception, CGI done right blends seamlessly with practical effects, blurring the boundary between the real and the computer-generated.

The use of CGI began modestly in 1958 with Alfred Hitchcock's "Vertigo", whose opening sequence used a WWII targeting computer and a pendulum to produce its spiral effects. This laid the foundation for more sophisticated uses of CGI, such as in 1973's "Westworld", where the creators used a technique inspired by Mariner 4's Mars imagery to depict a robot's point of view.

As technology advanced, films like 1982's "Tron" pushed the limits of visual effects, requiring painstaking manual input of values for each animated object. The film failed to win an Oscar for its effects because of the prejudiced view at the time that using computers was 'cheating', which shows how strongly the industry initially resisted CGI.

The advent of motion-capture technology, which records actors' movements via sensors, made CGI characters more realistic in films such as "The Lord of the Rings". Today CGI complements practical effects rather than replacing them, and it is often used in compositing to blend live-action and CG elements into realistic scenes, as seen in 2021's "Dune".

Despite criticism, CGI is an essential filmmaking tool that has enriched modern storytelling, enabling visual effects artists to realistically render a filmmaker's vision on screen. CGI isn't taking away from cinema's magic, but rather adding to it, crafting experiences and stories beyond the limitations of practical effects.

Join our channel by clicking here: https://rumble.com/c/c-4163125
