Premium Only Content

This video is only available to Rumble Premium subscribers. Subscribe to

enjoy exclusive content and ad-free viewing.

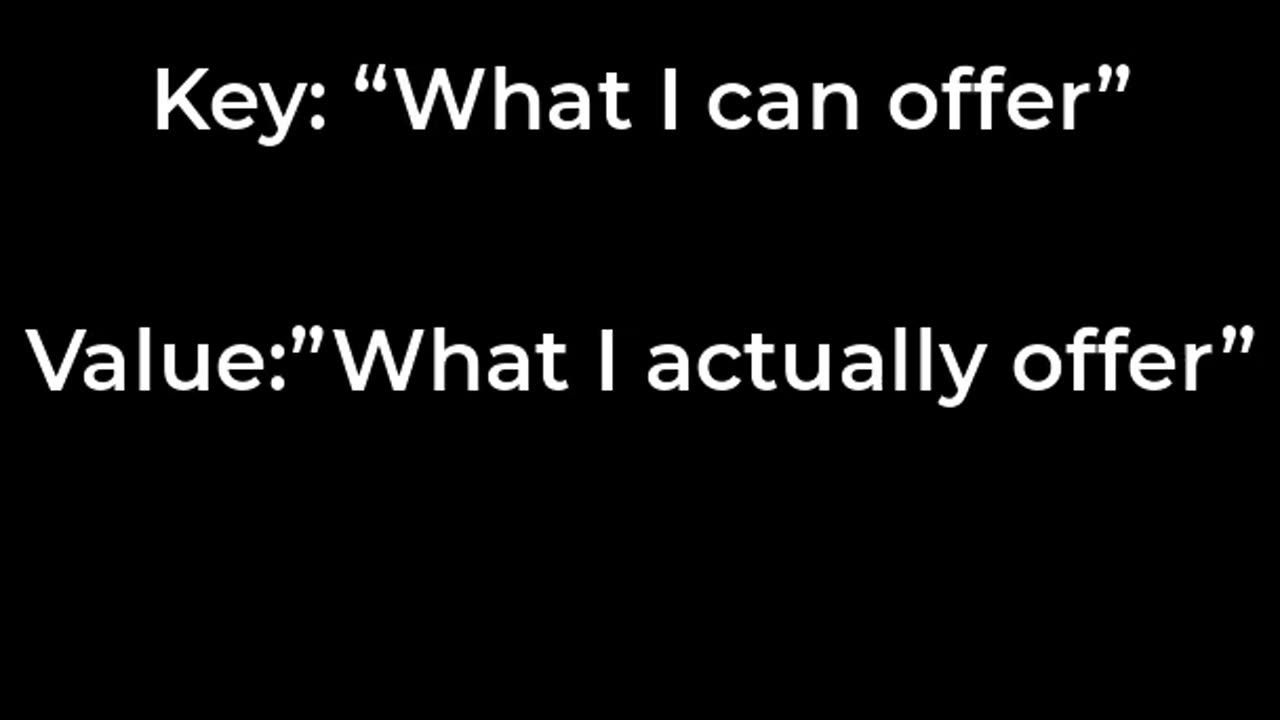

Understanding Query, Key and Value Vectors in Transformer Networks

1 year ago

This video explains query, key and value vectors, which are an essential part of the attention mechanism used in transformer neural networks. Transformers use multi-head attention to learn contextual relationships between the elements of a sequence (self-attention) and between input and output sequences (cross-attention). The attention mechanism scores how relevant one element is to another by taking the dot product of the first element's query vector with the second element's key vector. These scores are normalized into weights, and each element's output is a weighted sum of the value vectors, so the value vectors carry the contextual information contributed by the most relevant elements. Understanding how query, key and value vectors work can help in designing and optimizing transformer models for natural language processing and computer vision tasks.
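
As a rough illustration of the mechanism described above, here is a minimal pure-Python sketch of single-head scaled dot-product attention, softmax(QK^T / sqrt(d_k))V, the formulation used in the original Transformer paper (Vaswani et al., 2017). It is not code from the video. The token embeddings `x` and the projection matrices `w_q`, `w_k` and `w_v` are hypothetical toy values (in a trained model the projections are learned), and the helper names are illustrative only.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: list[float]) -> list[float]:
    # Subtract the max before exponentiating for numerical stability.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> tuple[Matrix, Matrix]:
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    # scores[i][j]: relevance of element j to element i (query i dotted with key j).
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    # Each row of weights is non-negative and sums to 1.
    weights = [softmax(row) for row in scores]
    # Each output row is a weighted sum of the value vectors.
    return matmul(weights, v), weights


# Hypothetical toy input: 3 tokens, each a 2-dimensional embedding.
x = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]

# Hypothetical projection matrices; a real model learns these during training.
w_q = [[1.0, 0.0], [0.0, 1.0]]
w_k = [[1.0, 0.0], [0.0, 1.0]]
w_v = [[1.0, 2.0], [3.0, 4.0]]

# Project each embedding into its query, key and value vectors.
q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
out, attn = scaled_dot_product_attention(q, k, v)

for row in attn:
    print([round(w, 3) for w in row])
```

In multi-head attention, this same computation runs in parallel with several independent sets of projection matrices, and the per-head outputs are concatenated and passed through one more learned projection.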
