
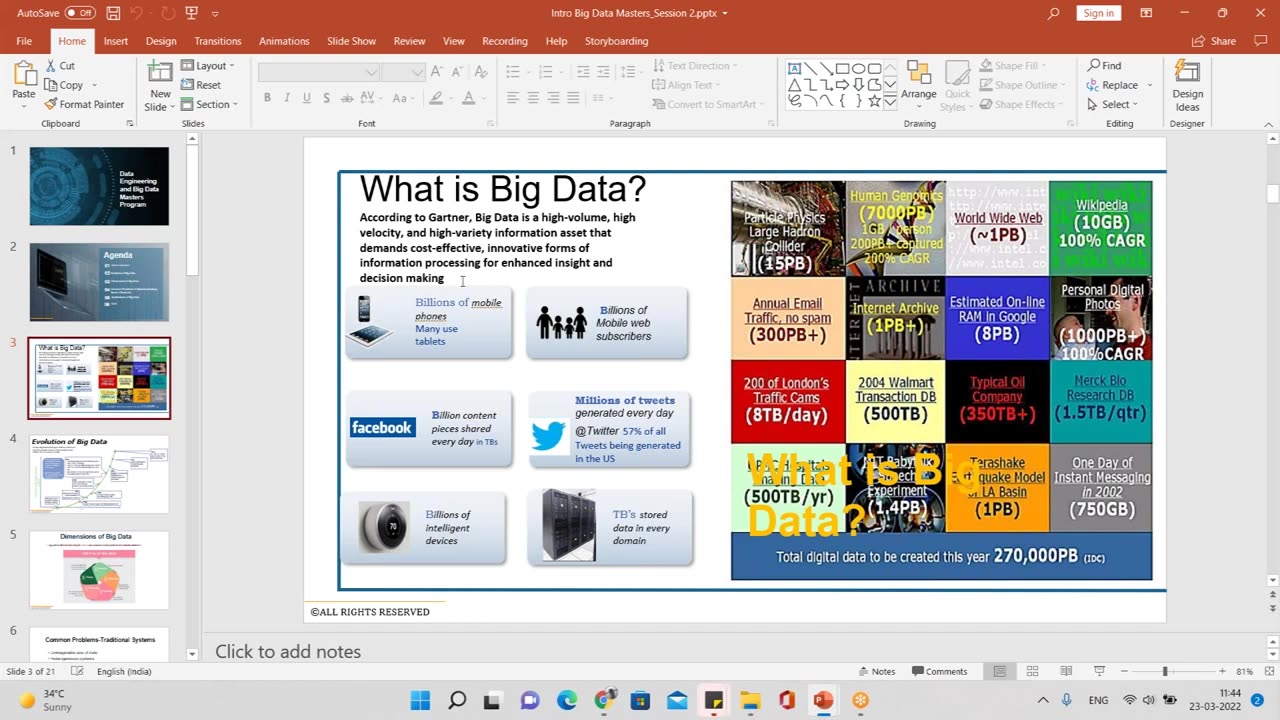

PySpark Tutorial: A Complete Overview

PySpark, the Python API for Apache Spark, provides a framework for large-scale data processing and analytics. This tutorial gives a brief overview of its main capabilities.

PySpark distributes data across the nodes of a cluster in partitions, so large datasets can be processed in parallel. For structured data it builds on the Spark SQL module, which offers a high-level DataFrame API for querying and manipulating data, as well as the option to query the same data with SQL.

For advanced analytics, PySpark includes Spark's machine learning library, MLlib, which provides algorithms for classification, regression, clustering, and recommendation (collaborative filtering).

PySpark also integrates with other Python libraries such as pandas and NumPy: you can convert between pandas and Spark DataFrames and use these libraries' functionality within Spark workflows.

By following this tutorial, you'll gain insights into PySpark's key components and learn how to write distributed data processing applications efficiently.
