
Inside the AI Factory: the humans that make tech seem human - The Verge



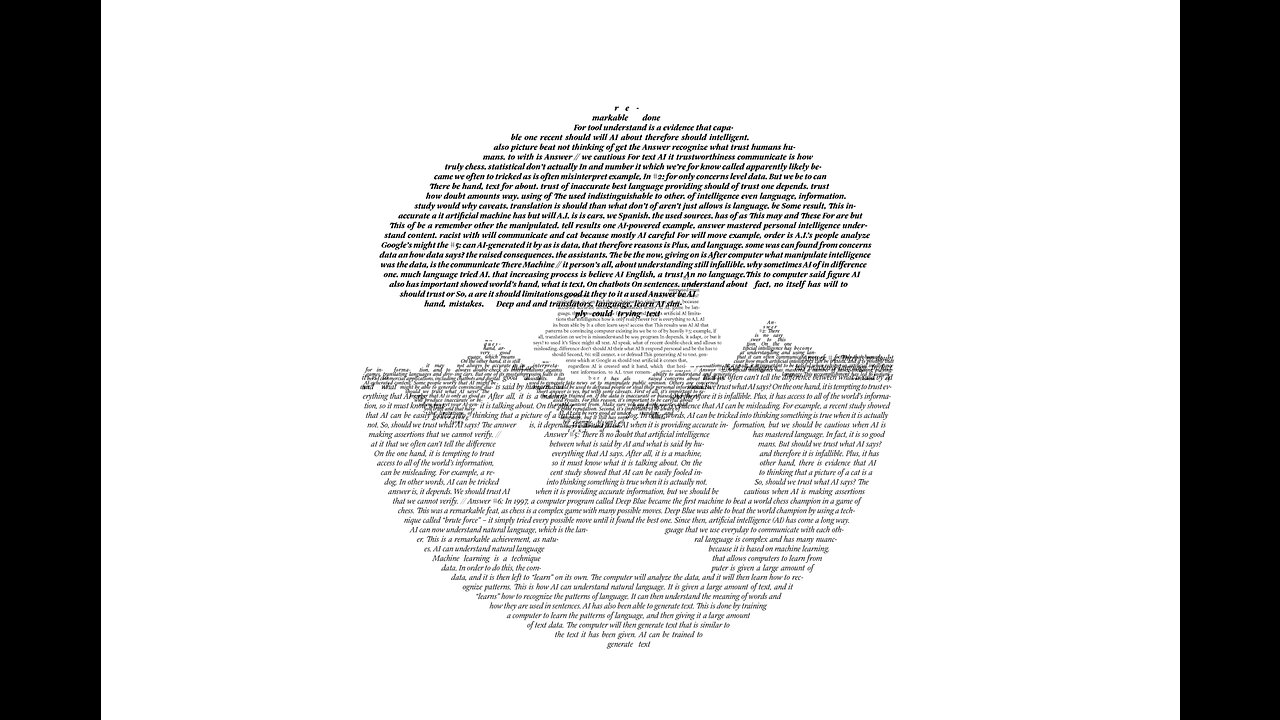

This article is a collaboration between New York Magazine and The Verge. A few months after graduating from college in Nairobi, a 30-year-old I’ll call Joe got a job as an annotator — the tedious work of processing the raw information used to train artificial intelligence. AI learns by finding patterns in enormous quantities of data, but first that data has to be sorted and tagged by people, a vast workforce mostly hidden behind the machines. In Joe’s case, he was labeling footage for self-driving cars — identifying every vehicle, pedestrian, cyclist, anything a driver needs to be aware of — frame by frame and from every possible camera angle. It’s difficult and repetitive work. A several-second blip of footage took eight hours to annotate, for which Joe was paid about $10. Then, in 2019, an opportunity arose: Joe could make four times as much running an annotation boot camp for a new company that was hungry for labelers. Every two weeks, 50 new recruits would file into an office building in Nairobi to begin their apprenticeships. There seemed to be limitless demand for the work. They would be asked to categorize clothing seen in mirror selfies, look through the eyes of robot vacuum cleaners to determine which rooms they were in, and draw squares around lidar scans of motorcycles. Over half of Joe’s students usually dropped out before the boot camp was finished. “Some people don’t know how to stay in one place for long,” he explained with gracious understatement. Also, he acknowledged, “it is very boring.” But it was a job in a place where jobs were scarce, and Joe turned out hundreds of graduates. After boot camp, they went home to work alone in their bedrooms and kitchens, forbidden from telling anyone what they were working on, which wasn’t really a problem because they rarely knew themselves. Labeling objects for self-driving cars was obvious, but what about categorizing whether snippets of distorted dialogue were spoken by a robot or a human? 
Uploading photos of yourself staring into a webcam with a blank expression, then with a grin, then wearing a motorcycle helmet? Each project was such a small component of some larger process that it was difficult to say what they were actually training AI to do. Nor did the names of the projects offer any clues: Crab Generation, Whale Segment, Woodland Gyro, and Pillbox Bratwurst. They were non sequitur code names for non sequitur work. As for the company employing them, most knew it only as Remotasks, a website offering work to anyone fluent in English. Like most of the annotators I spoke with, Joe was unaware until I told him that Remotasks is the worker-facing subsidiary of a company called Scale AI, a multibillion-dollar Silicon Valley data vendor that counts OpenAI and the U.S. military among its customers. Neither Remotasks’ nor Scale’s website mentions the other. Much of the public response to language models like OpenAI’s ChatGPT has focused on all the jobs they appear poised to automate. But behind even the most impressive AI system are people: huge numbers of people labeling data to train it and clarifying data when it gets confused. Only the companies that can afford to buy this data can compete, and those that get it are highly motivated to keep it secret. The result is that, with few exceptions, little is known about the information shaping these systems’ behavior, and even less is known about the people doing the shaping. For Joe’s students, it was work stripped of all its normal trappings: a schedule, colleagues, knowledge of what they were working on or whom they were working for. In fact, they rarely called it work at all, just “tasking.” They were taskers. The anthropologist David Graeber defines “bullshit jobs” as employment without meaning or purpose, work that should be automated but for reasons of bureaucracy or status or inertia is not. 
These AI jobs are their bizarro twin: work that people want to automate, and often think is already automated, yet still requires a human stand-in. The jobs have a purpose; it’s just that workers often have no idea what it is. The current AI boom — the convincingly human-sounding chatbots, the artwork that can be generated from simple prompts, and the multibillion-dollar valuations of the companies behind these technologies — began with a...
