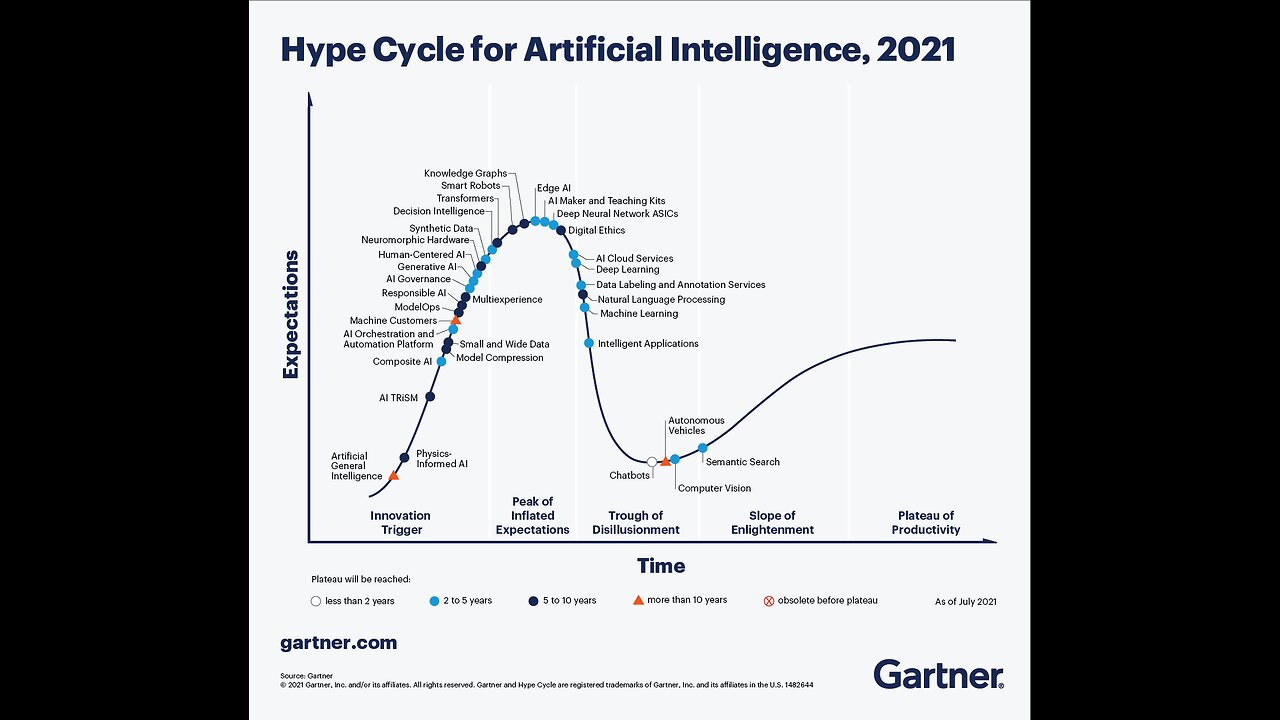

The AI Hype Cycle Is Distracting Companies - HBR.org Daily

Machine learning has an “AI” problem. With breathtaking new capabilities from generative AI released every few months, and AI hype escalating at an even higher rate, it’s high time we differentiate most of today’s practical ML projects from those research advances. This begins by correctly naming such projects: Call them “ML,” not “AI.” Including all ML initiatives under the “AI” umbrella oversells and misleads, contributing to a high failure rate for ML business deployments. For most ML projects, the term “AI” goes entirely too far, because it alludes to human-level capabilities. In fact, when you unpack the meaning of “AI,” you discover just how overblown a buzzword it is. If it doesn’t mean artificial general intelligence, a grandiose goal for technology, then it just doesn’t mean anything at all.

You might think that news of “major AI breakthroughs” would do nothing but help the adoption of machine learning (ML). If only. Even before the latest splashes, most notably OpenAI’s ChatGPT and other generative AI tools, the rich narrative about an emerging, all-powerful AI was already a growing problem for applied ML.

That’s because for most ML projects, the buzzword “AI” goes too far. It overly inflates expectations and distracts from the precise way ML will improve business operations.

Most practical use cases of ML, designed to improve the efficiency of existing business operations, innovate in fairly straightforward ways. Don’t let the glare emanating from this glitzy technology obscure the simplicity of its fundamental duty: The purpose of ML is to issue actionable predictions, which is why it’s sometimes also called predictive analytics. This delivers real value, so long as you eschew the false hype that it is “highly accurate,” like a digital crystal ball.

This capability translates into tangible value in an uncomplicated manner: The predictions drive millions of operational decisions. For example, by predicting which customers are most likely to cancel, a company can offer those customers incentives to stick around. And by predicting which credit card transactions are fraudulent, a card processor can disallow them.

It’s practical ML use cases like those that deliver the greatest impact on existing business operations, and the advanced data science methods that such projects apply boil down to ML and only ML.

Here’s the problem: Most people conceive of ML as “AI.” This is a reasonable misunderstanding. But “AI” suffers from an unrelenting, incurable case of vagueness; it is a catch-all term of art that does not consistently refer to any particular method or value proposition.

Calling ML tools “AI” oversells what most ML business deployments actually do. In fact, you couldn’t overpromise more than you do when you call something “AI.” The moniker invokes the notion of artificial general intelligence (AGI), software capable of any intellectual task humans can do.

This exacerbates a significant problem with ML projects: They often lack a keen focus on their value, that is, exactly how ML will render business processes more effective. As a result, most ML projects fail to deliver value. In contrast, ML projects that keep their concrete operational objective front and center stand a good chance of achieving that objective.

What Does AI Actually Mean?

“‘AI-powered’ is tech’s meaningless equivalent of ‘all natural.’” –Devin Coldewey, TechCrunch

“AI” cannot get away from AGI for two reasons. First, the term “AI” is generally thrown around without clarifying whether we’re talking about AGI or narrow AI, a term that essentially means practical, focused ML deployments. Despite the tremendous differences, the boundary between them blurs in common rhetoric and software sales materials. Second, there’s no satisfactory way to define AI besides AGI.

Defining “AI” as something other than AGI has become a research challenge unto itself, albeit a quixotic one. If it doesn’t mean AGI, it doesn’t mean anything: Other suggested definitions either fail to qualify as “intelligent” in the ambitious spirit implied by “AI” or fail to establish an objective goal. We face this conundrum whether trying to pinpoint 1) a definition for “AI,” 2) the criteria by which a computer would qualify as “intelligent,” or 3) a performance benchmark that would certify true AI. These three are one and t...
