Premium Only Content

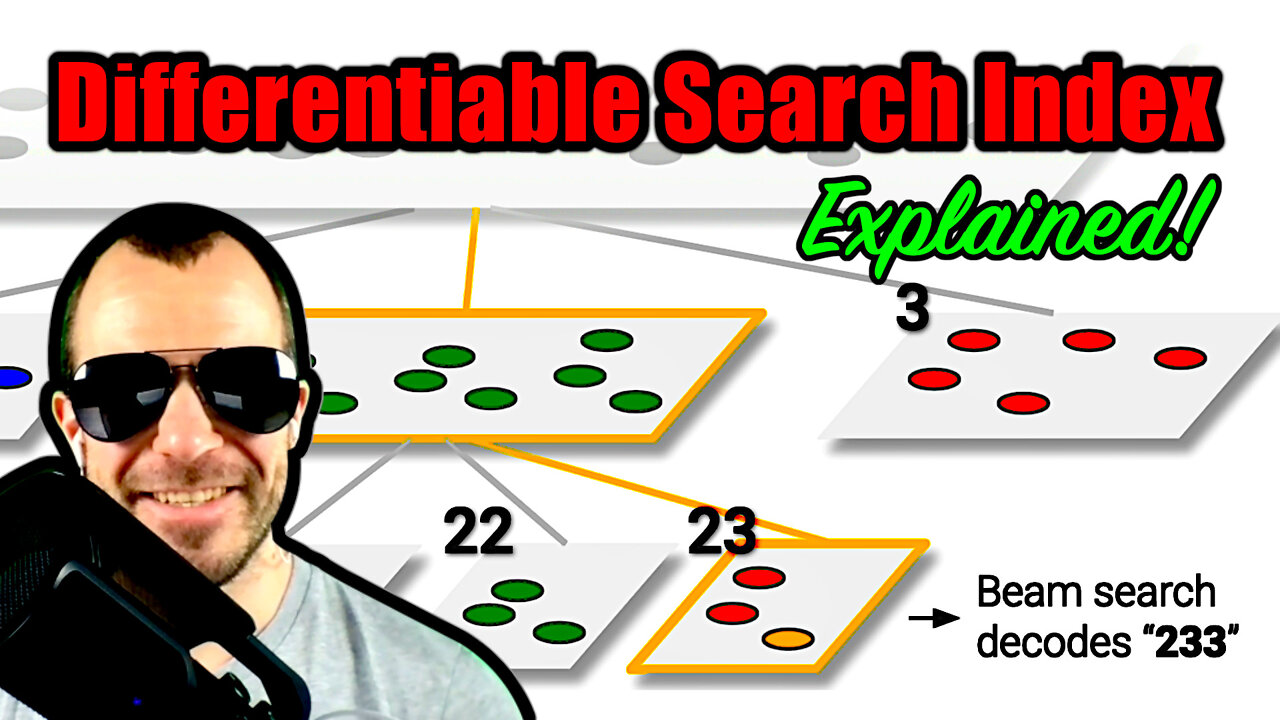

Transformer Memory as a Differentiable Search Index (Machine Learning Research Paper Explained)

#dsi #search #google

Search engines work by building an index and then looking things up in it. Usually, that index is a separate data structure. In keyword search, we build and store inverted indices. In neural search, we build nearest-neighbor indices. This paper does something different: it directly trains a Transformer to return the ID of the most relevant document. No similarity search over embeddings is performed, and no external data structure is needed, as the entire index is essentially captured by the model's weights. The paper experiments with various ways of representing documents and training the system, which works surprisingly well!
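To make the contrast concrete, here is a minimal sketch of the two classic setups described above: a keyword index that maps terms to document IDs, and a nearest-neighbor lookup over document vectors. Everything in it is an illustrative assumption: the toy corpus, the function names, and especially `embed`, which is a plain bag-of-words count vector standing in for a learned neural encoder. The point is only that both approaches keep an explicit data structure outside of any model, which is exactly what DSI does away with.

```python
import math
from collections import defaultdict

# Toy corpus (hypothetical, for illustration only).
docs = {
    "d1": "transformers learn attention over tokens",
    "d2": "search engines build an inverted index",
    "d3": "nearest neighbor search over embeddings",
}

def build_inverted_index(docs):
    """Keyword search: map each term to the set of docids containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return index

def keyword_search(index, query):
    """Return the docids that contain every term of the query."""
    postings = [index.get(term, set()) for term in query.lower().split()]
    return set.intersection(*postings) if postings else set()

def embed(text, vocab):
    """Stand-in for a neural encoder: a bag-of-words count vector."""
    words = text.lower().split()
    return [words.count(term) for term in vocab]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def nearest_neighbor_search(vector_index, query, vocab):
    """Neural-style search: return the docid whose vector is closest to the query."""
    query_vec = embed(query, vocab)
    return max(vector_index, key=lambda d: cosine(vector_index[d], query_vec))

# Both indices are separate data structures that live outside any model.
inverted_index = build_inverted_index(docs)
vocab = sorted({term for text in docs.values() for term in text.lower().split()})
vector_index = {doc_id: embed(text, vocab) for doc_id, text in docs.items()}

keyword_hits = keyword_search(inverted_index, "inverted index")
nearest_doc = nearest_neighbor_search(vector_index, "search over embeddings", vocab)
```

A real system would use a learned encoder and an approximate nearest-neighbor library instead of the exhaustive `max` above, but the structure is the same: build the index once, then look things up in it.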

Sponsor: Diffgram

https://diffgram.com?ref=yannic

OUTLINE:

0:00 - Intro

0:45 - Sponsor: Diffgram

1:35 - Paper overview

3:15 - The search problem, classic and neural

8:15 - Seq2seq for directly predicting document IDs

11:05 - Differentiable search index architecture

18:05 - Indexing

25:15 - Retrieval and document representation

33:25 - Training DSI

39:15 - Experimental results

49:25 - Comments & Conclusions

Paper: https://arxiv.org/abs/2202.06991

Abstract:

In this paper, we demonstrate that information retrieval can be accomplished with a single Transformer, in which all information about the corpus is encoded in the parameters of the model. To this end, we introduce the Differentiable Search Index (DSI), a new paradigm that learns a text-to-text model that maps string queries directly to relevant docids; in other words, a DSI model answers queries directly using only its parameters, dramatically simplifying the whole retrieval process. We study variations in how documents and their identifiers are represented, variations in training procedures, and the interplay between models and corpus sizes. Experiments demonstrate that given appropriate design choices, DSI significantly outperforms strong baselines such as dual encoder models. Moreover, DSI demonstrates strong generalization capabilities, outperforming a BM25 baseline in a zero-shot setup.
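As a rough illustration of the text-to-text framing in the abstract, the sketch below builds the two kinds of training pairs one could feed to a seq2seq model: document text mapped to its docid (indexing) and query text mapped to the relevant docid (retrieval). This is a sketch under stated assumptions, not the authors' code: the function name `make_dsi_examples`, the `"index: "` and `"query: "` prefixes, and the `max_doc_tokens` truncation are all hypothetical choices made here for illustration, and no model is actually trained.

```python
from typing import Dict, List, Tuple

def make_dsi_examples(
    docs: Dict[str, str],
    queries: List[Tuple[str, str]],
    max_doc_tokens: int = 32,
) -> List[Tuple[str, str]]:
    """Build (input_text, target_docid) pairs for a text-to-text model.

    Assumptions (not from the source): the task prefixes and the
    whitespace-token truncation are illustrative choices only.
    """
    examples = []
    # Indexing pairs: the model sees (part of) a document and must emit its docid.
    for docid, text in docs.items():
        truncated = " ".join(text.split()[:max_doc_tokens])
        examples.append(("index: " + truncated, docid))
    # Retrieval pairs: the model sees a query and must emit the relevant docid.
    for query, docid in queries:
        examples.append(("query: " + query, docid))
    return examples

# Hypothetical toy data; docids are represented as plain strings here.
toy_docs = {"17": "the eiffel tower is located in paris france"}
toy_queries = [("where is the eiffel tower", "17")]
examples = make_dsi_examples(toy_docs, toy_queries, max_doc_tokens=4)
```

Because both kinds of pairs share the same output space (docid strings), a single model trained on their union has to store the corpus in its parameters in order to answer queries, which is the idea the abstract describes.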

Authors: Yi Tay, Vinh Q. Tran, Mostafa Dehghani, Jianmo Ni, Dara Bahri, Harsh Mehta, Zhen Qin, Kai Hui, Zhe Zhao, Jai Gupta, Tal Schuster, William W. Cohen, Donald Metzler

Links:

Merch: store.ykilcher.com

TabNine Code Completion (Referral): http://bit.ly/tabnine-yannick

YouTube: https://www.youtube.com/c/yannickilcher

Twitter: https://twitter.com/ykilcher

Discord: https://discord.gg/4H8xxDF

BitChute: https://www.bitchute.com/channel/yann...

LinkedIn: https://www.linkedin.com/in/ykilcher

BiliBili: https://space.bilibili.com/2017636191

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

SubscribeStar: https://www.subscribestar.com/yannick...

Patreon: https://www.patreon.com/yannickilcher

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n
